Types of Non-Contact 3D Scanning Methods

2-1. Laser

a. Time-of-flight: (long range, less accurate)

A laser emits light, and the amount of time before the reflected light is seen by a detector is measured.

Because the speed of light is known, the measured time gives the round-trip distance; half of that is the distance to the object.
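As a minimal sketch of this relation, the one-way distance is the speed of light multiplied by the round-trip time, divided by two. The function name `tof_distance_m` is an illustrative assumption, not part of any scanner's API.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition of the metre


def tof_distance_m(round_trip_seconds: float) -> float:
    """Return the one-way distance (metres) for a measured round-trip time."""
    if round_trip_seconds < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_seconds / 2


# A 100 ns round trip corresponds to an object roughly 15 m away.
print(tof_distance_m(100e-9))
```

Light covers about 30 cm per nanosecond, so a timing error of just 1 ns shifts the result by about 15 cm. This helps explain why time-of-flight suits long ranges better than fine detail.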

b. Triangulation: (short range, more accurate)

A laser emits light, and a camera looks for the location of the laser dot on the object.

The laser emitter, the camera, and the laser dot form a triangle. Three things are known: (1) the distance between the laser emitter and the camera, (2) the angle at the laser emitter corner, and (3) the angle at the camera corner, which can be determined from the location of the laser dot in the camera's field of view. With (1), (2), and (3), the distance between the laser and the object can be calculated.
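The calculation from (1), (2), and (3) can be sketched with the law of sines. This is an illustrative example under idealized assumptions (a flat triangle, exact angles); the function and parameter names are hypothetical and not taken from any scanner's software.

```python
import math


def laser_to_object_distance(baseline_m: float,
                             laser_angle_deg: float,
                             camera_angle_deg: float) -> float:
    """Distance from the laser emitter to the laser dot on the object.

    baseline_m       -- (1) distance between laser emitter and camera
    laser_angle_deg  -- (2) angle at the laser emitter corner
    camera_angle_deg -- (3) angle at the camera corner
    """
    alpha = math.radians(laser_angle_deg)
    beta = math.radians(camera_angle_deg)
    # The three angles of a triangle sum to 180 degrees.
    gamma = math.pi - alpha - beta
    if alpha <= 0 or beta <= 0 or gamma <= 0:
        raise ValueError("the angles do not form a valid triangle")
    # Law of sines: the laser-to-object side is opposite the camera angle,
    # and the baseline is opposite the angle at the object.
    return baseline_m * math.sin(beta) / math.sin(gamma)


# Laser fires perpendicular to a 10 cm baseline; camera sees the dot at 45 degrees.
print(laser_to_object_distance(0.10, 90.0, 45.0))
```

In this setup the triangle is a right isosceles triangle, so the result is approximately equal to the baseline (about 0.10 m).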

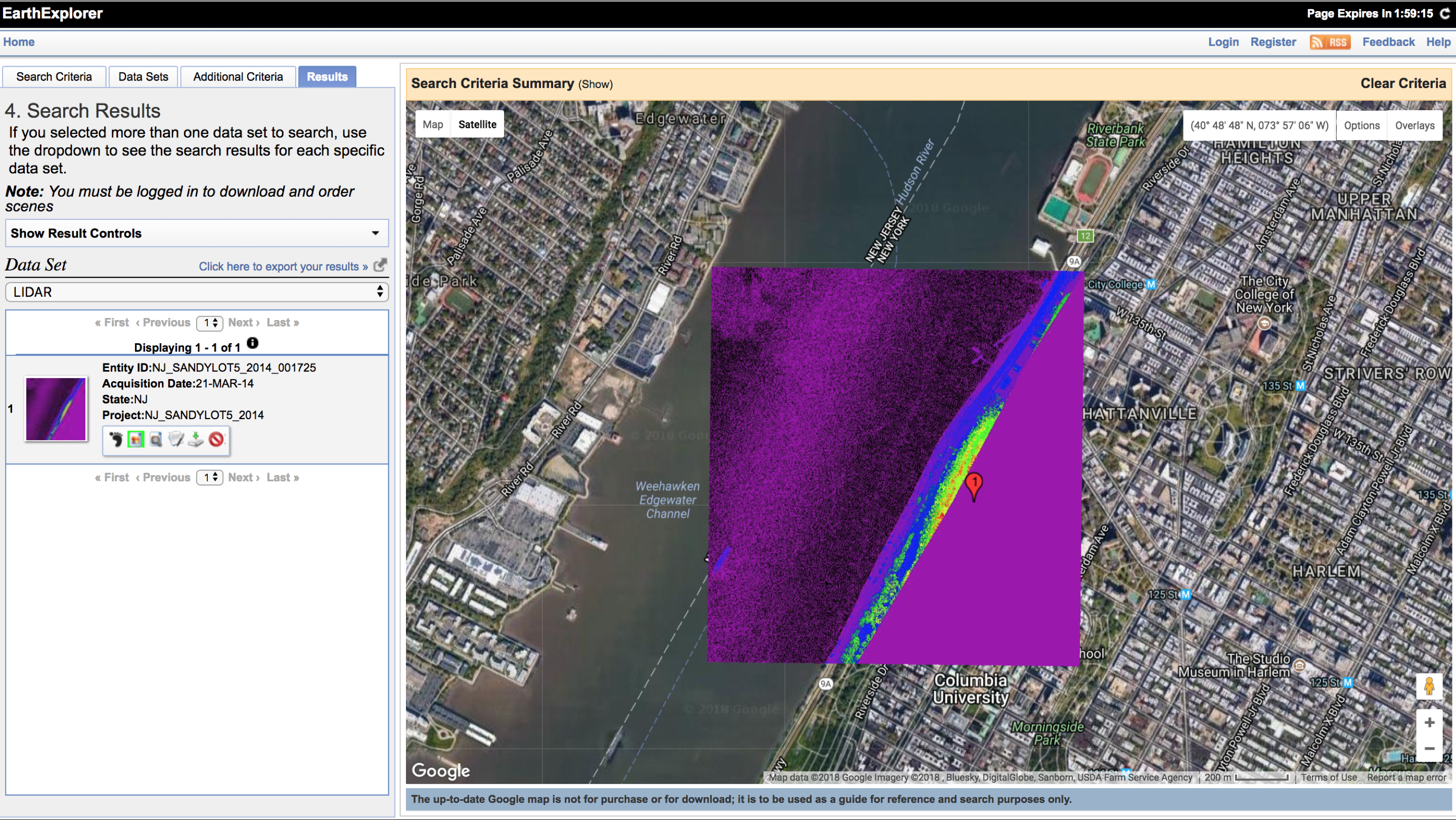

For example, LiDAR (Light Detection and Ranging) measures how long it takes for the emitted light to return to the sensor.

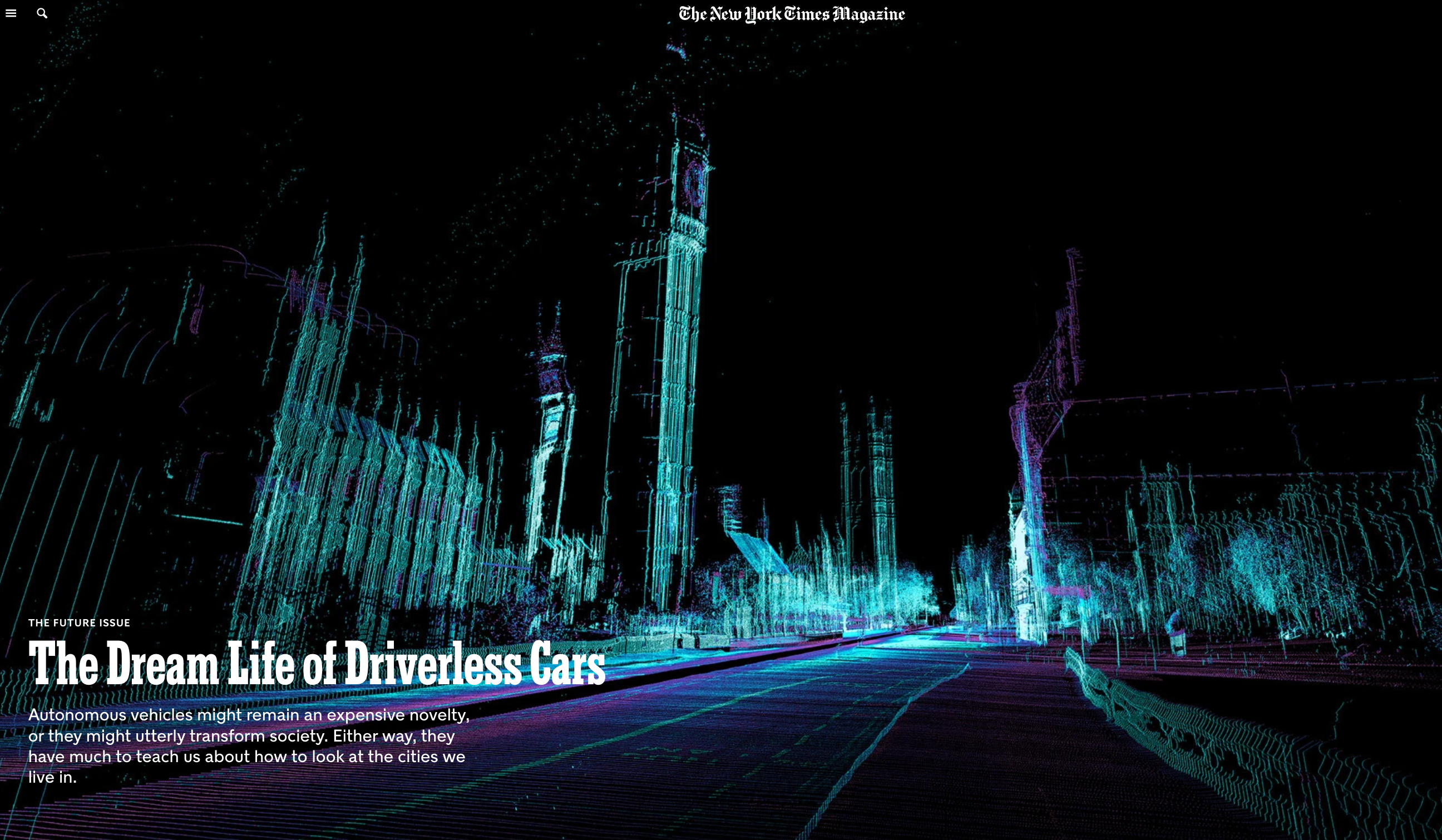

Dream Life of Driverless Cars, ScanLAB Projects for The New York Times

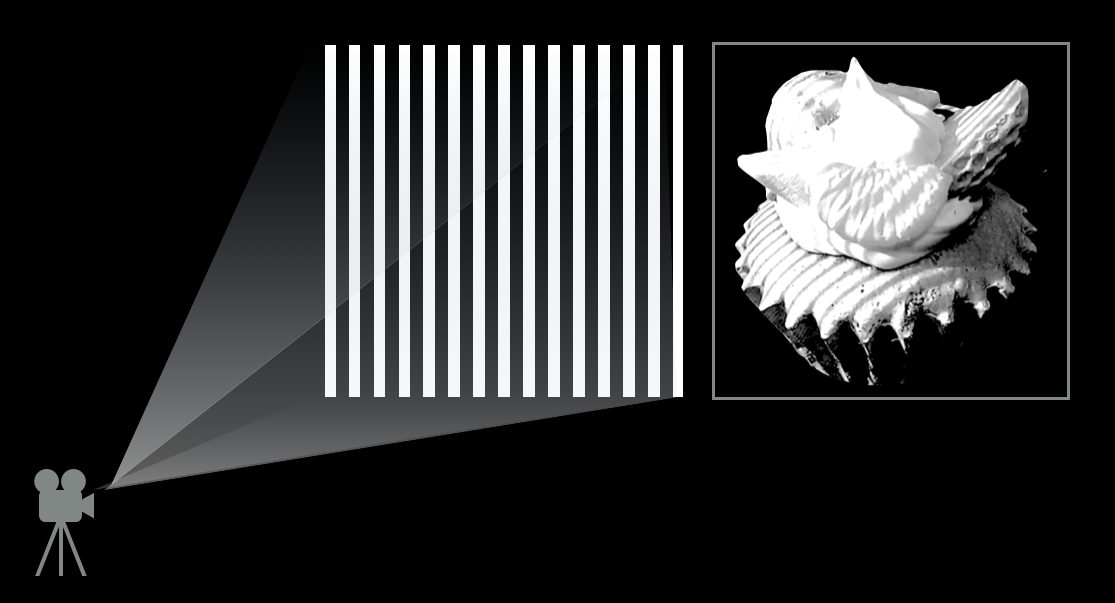

2-2. Structured Light

Structured light 3D scanners project a known pattern of light. The distortion of the pattern helps determine the shape of the object.
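One common way to turn pattern distortion into depth is triangulation between the projector and the camera: the farther a projected feature appears shifted from its expected position, the closer the surface is. The sketch below assumes an idealized, rectified projector-camera pair; the function name and the numbers are hypothetical, and real scanners rely on their own calibrated (often proprietary) pipelines.

```python
def depth_from_shift(focal_length_px: float,
                     baseline_m: float,
                     shift_px: float) -> float:
    """Estimate depth (metres) from the observed shift of a pattern feature.

    focal_length_px -- camera focal length in pixels (assumed known)
    baseline_m      -- projector-to-camera distance (assumed known)
    shift_px        -- how far the feature moved in the image, in pixels
    """
    if shift_px <= 0:
        raise ValueError("shift must be positive for a finite depth")
    # Similar triangles: depth is inversely proportional to the shift.
    return focal_length_px * baseline_m / shift_px


# Hypothetical values: 600 px focal length, 8 cm baseline.
for shift in (96.0, 48.0, 24.0):
    print(shift, depth_from_shift(600.0, 0.08, shift))
```

Halving the shift doubles the estimated depth, which is why small pattern shifts on distant surfaces are harder to measure precisely.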

Many different types of sensors use structured light. Here you see an Artec Eva handheld scanner used in the video The President, in 3D by the Smithsonian.

A high-res digital model resulting from the structured light sensor used in The President, in 3D by the Smithsonian.

Another example of a handheld scan, using a Shining EinScan scanner.

Hardware

Structured light scanners like the ones above cost around $7k (Shining EinScan) to $20k (Artec Eva). The software is usually proprietary. We will focus on (comparatively) affordable structured light sensors.

For example, you can find a used Kinect for about $20 online (the model number at the bottom of the scanner should be “1414”).

The Kinect has (1) an IR projector to project IR light, (2) an IR receiver to see the IR light, and (3) an RGB camera to give the 3D model color information.

However, the resolution of the Kinect's RGB camera is only about 640 × 480 px.

Studios like Scatter created DepthKit to replace the RGB camera with a DSLR.

The Structure Sensor is another lower-cost option (around $300 to $500).

The iPhone X also has an IR projector and IR receiver for Face ID.

Software

Desktop

SKANECT: Windows, macOS (supports Structure Sensor, Kinect)

ReconstructMe: Windows (supports most sensors)

Apps

Itseez3d: iOS, Android (supports Structure Sensor, RealSense)

Canvas.io: iOS (supports the iPhone X built-in sensor)

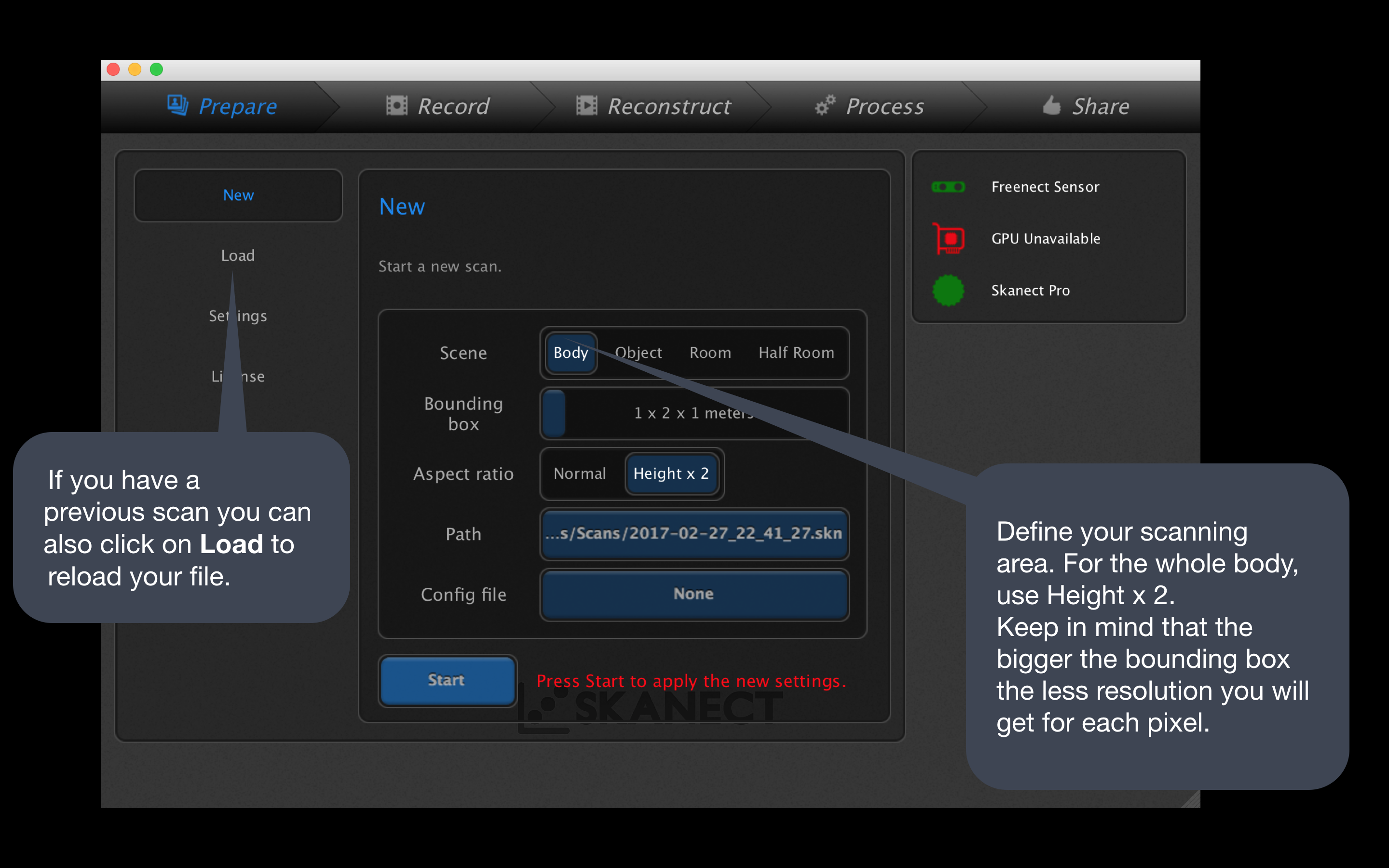

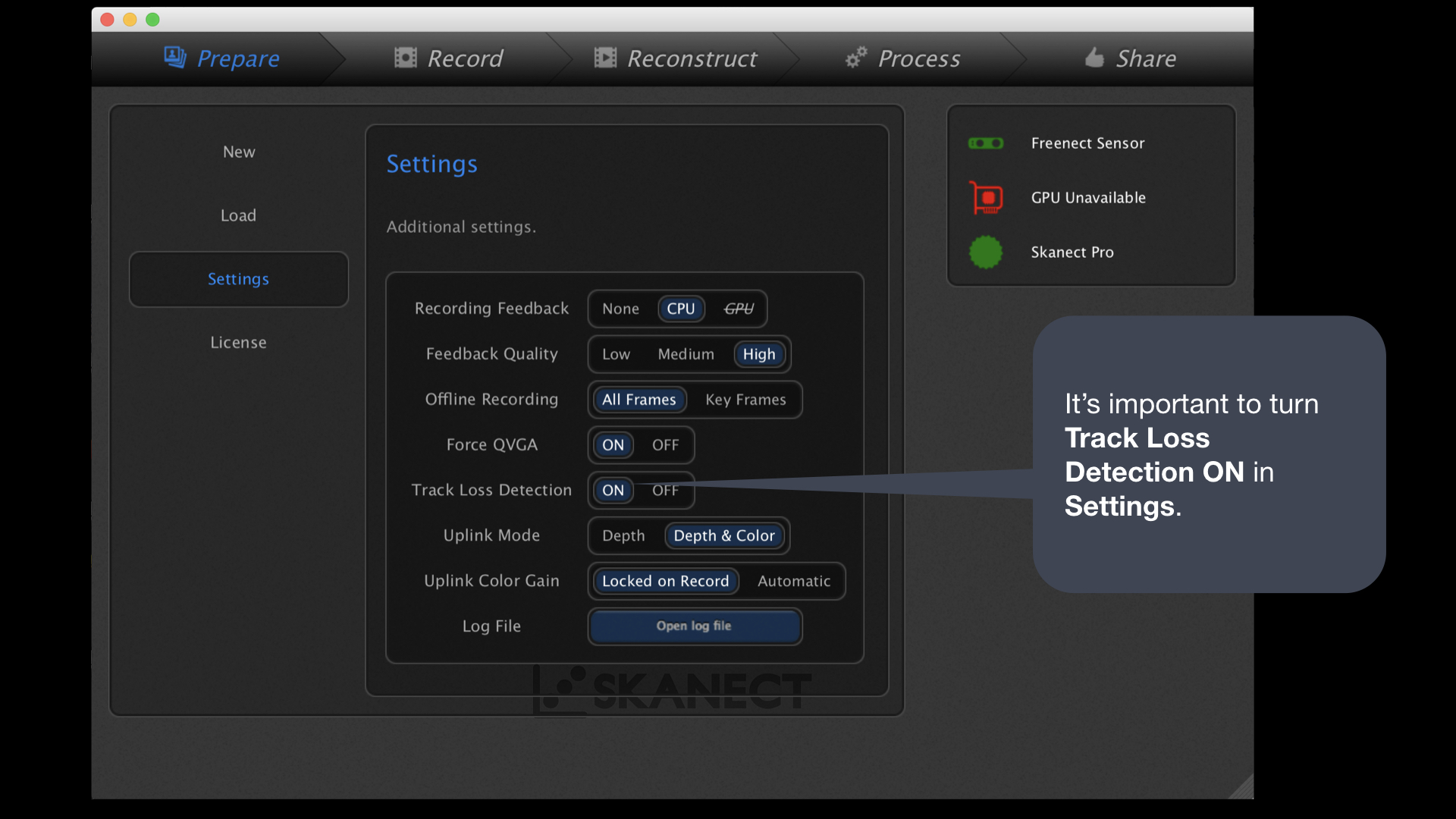

Tutorial

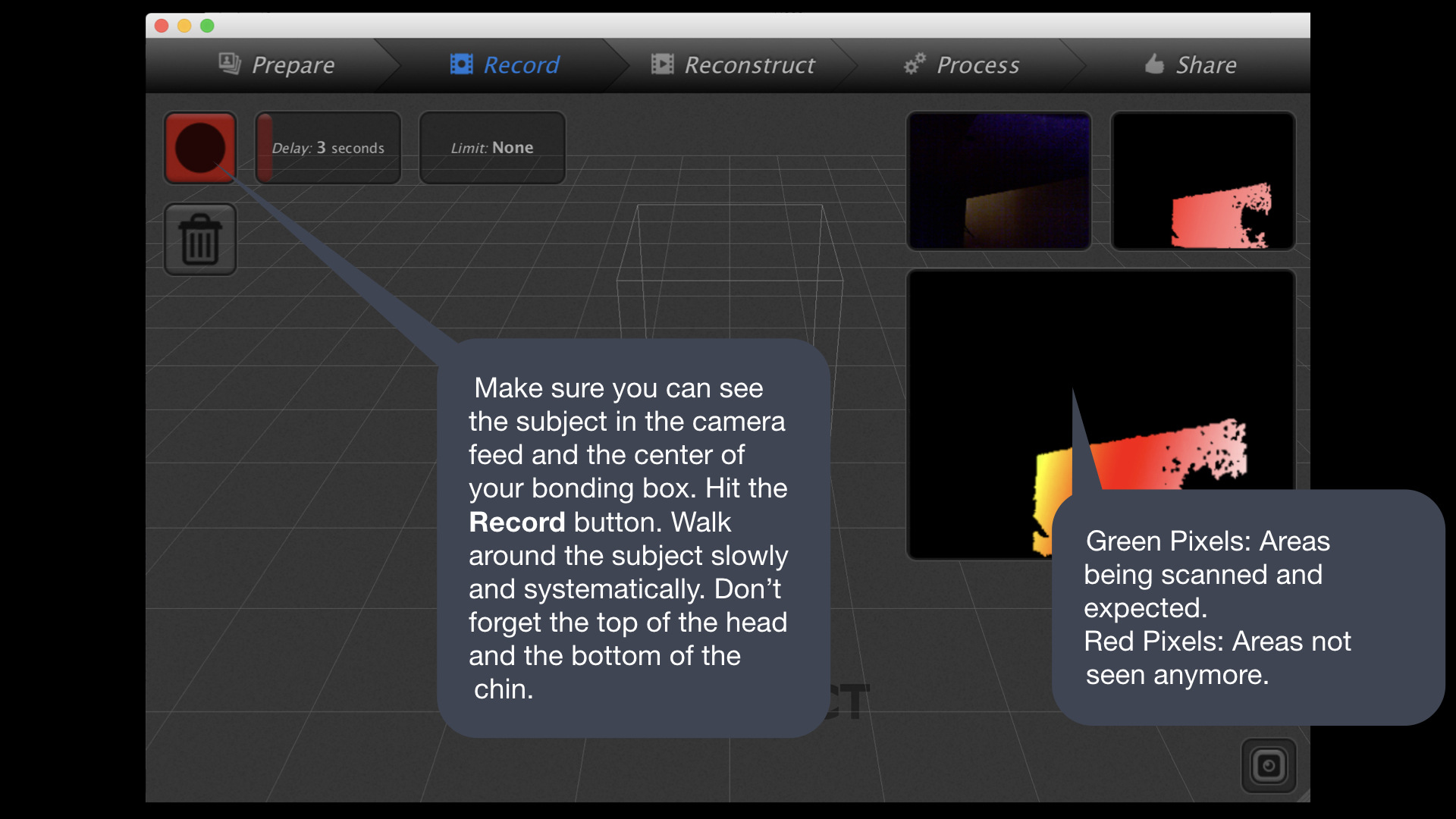

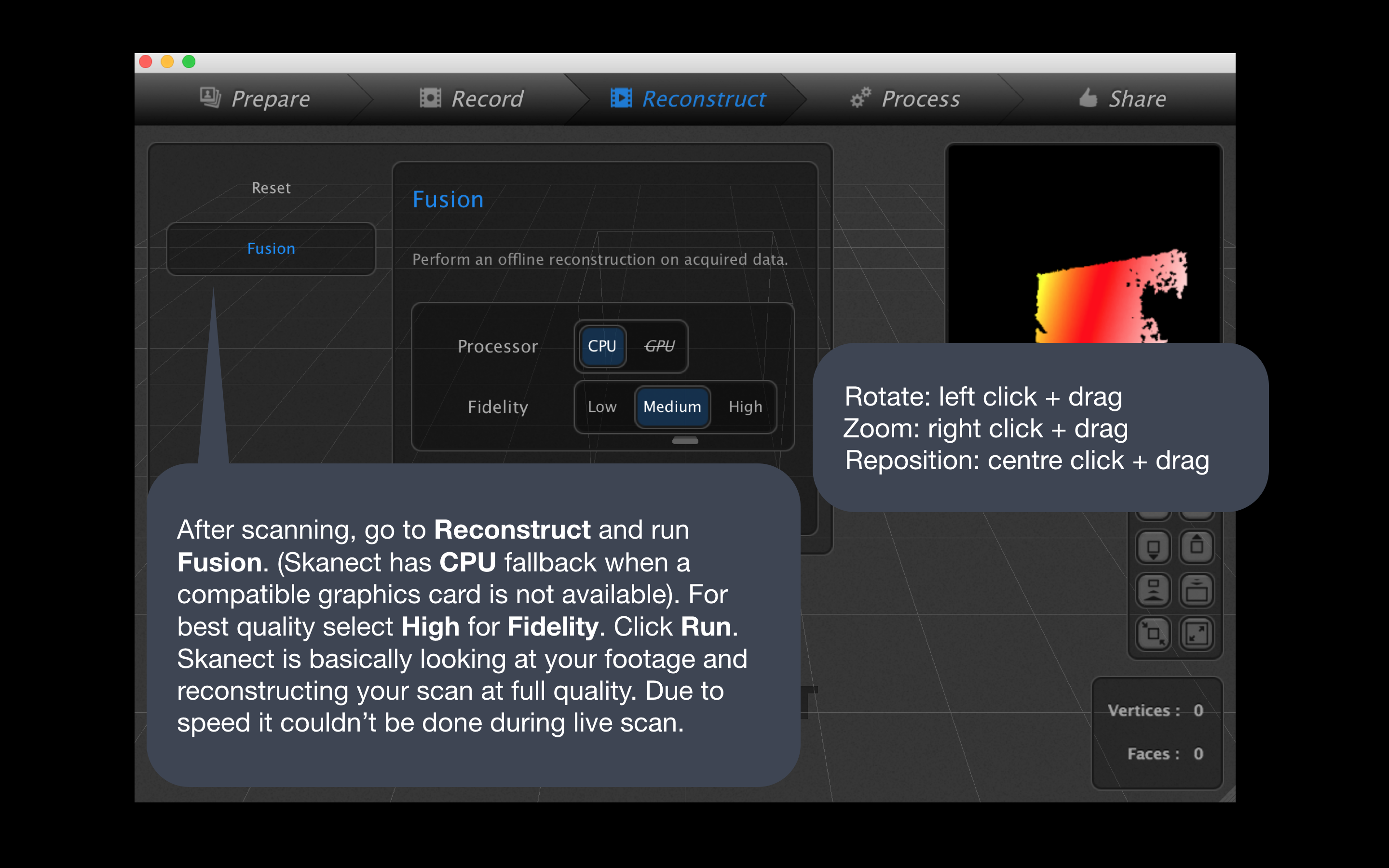

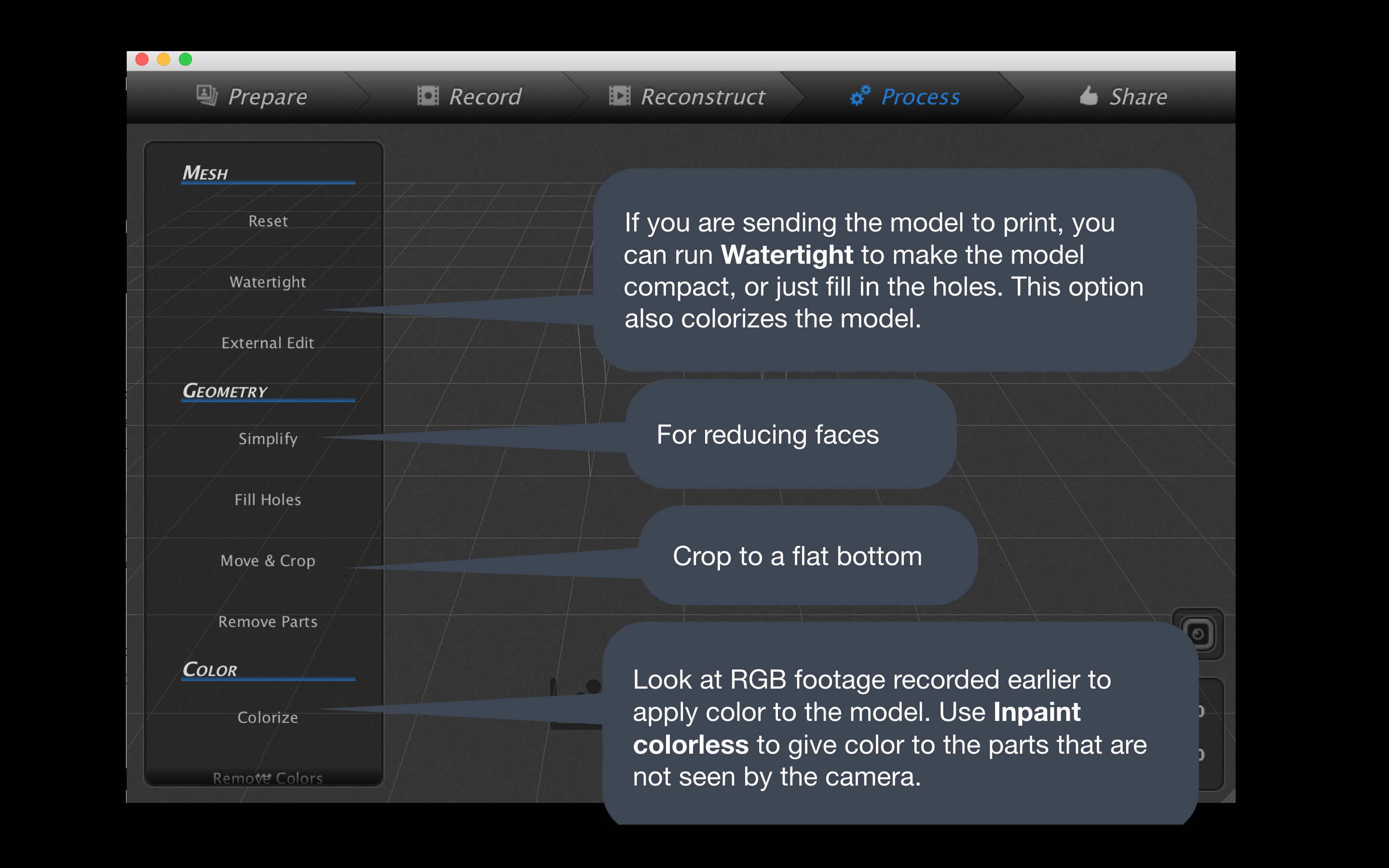

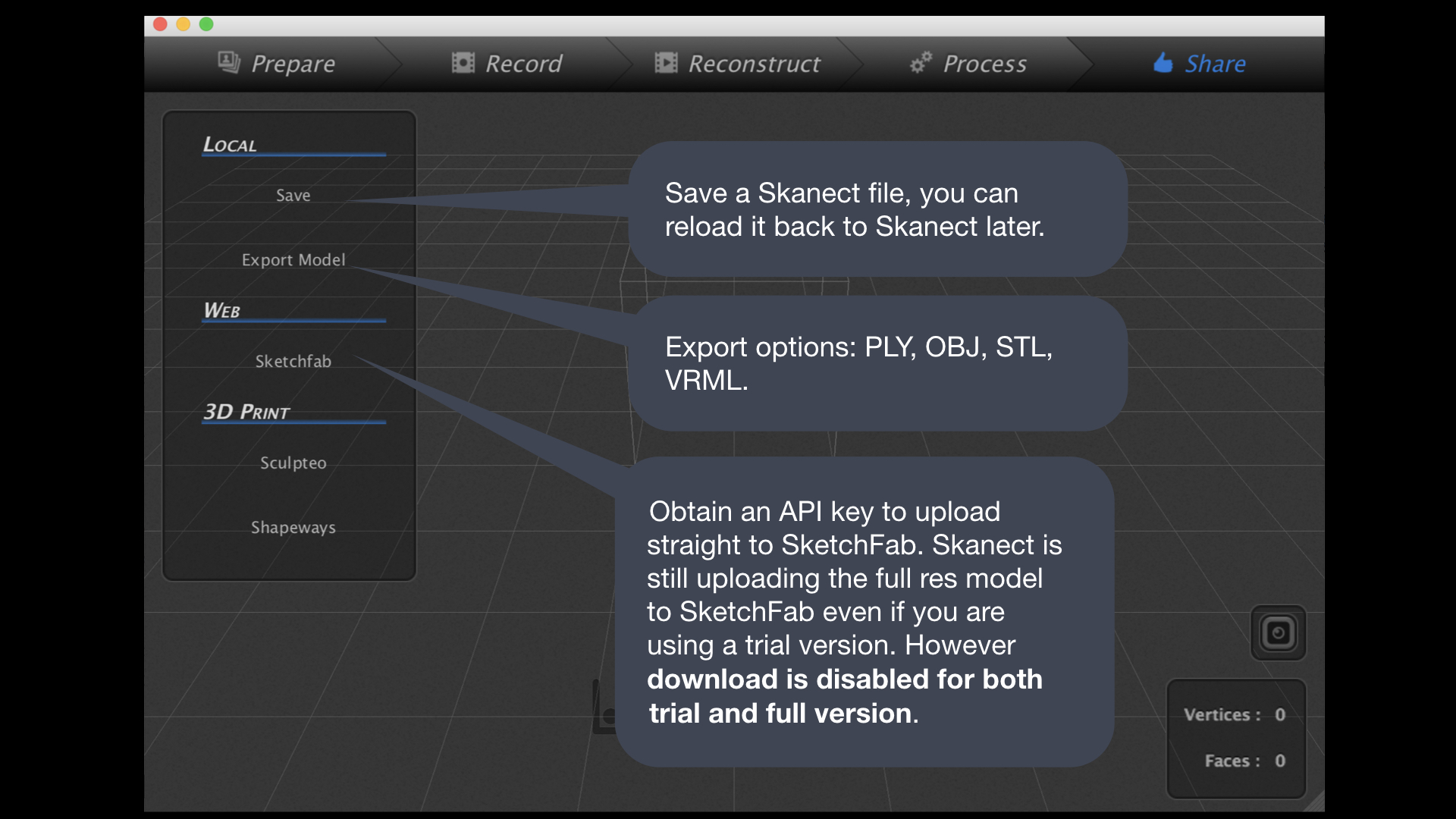

Here are the steps for using the SKANECT software with a Structure Sensor or Kinect.

Feedback Screen

Green Pixels: Areas being scanned as expected.

Red Pixels: Areas the sensor no longer sees.

Portrait Capture Tips

(1) Pick a well-lit place (avoid direct sunlight as it will interfere with IR).

(2) Have the subject look at a specific spot or close their eyes.

(3) Take off glasses or any reflective objects.

(4) Try to start and end somewhere that’s not the face.

(5) You can walk around the subject in a circular motion, sweeping up and down slowly (think Tai Chi). Or you can have the subject stand on a turntable.

Artist Gabe BC scanning an object.

3D scans from Knowing Together workshop participants: Kinect + SKANECT.

Example scan using Structure Sensor + SKANECT.